A robots.txt file is just a plain text document that you can create with any text editor (such as Notepad on Windows) and save under the name robots with the file extension .txt. Once you add rules to this document and place it in the root folder of your web server, you can better control how search engine bots such as Googlebot crawl your site.

Important to Note About Using robots.txt File

Most website owners will NOT need to use this file, nor waste time trying to learn how to Disallow or Allow certain sections of their website, because robots.txt directives are not meant for controlling which URLs Google indexes. The only real use cases for robots.txt rules are:

- If you want to keep certain parts of a website from being crawled by user-agents such as Googlebot

- If Googlebot is requesting too many URLs and causing server performance issues

Stop Wasting Time Trying to Create Robots.txt File for SEO

I know there are many so-called SEO experts out there confusing business website owners about using the robots.txt file for search engine optimization purposes. Do not use it for SEO. Simple.

Instead, focus on creating useful, engaging and optimized content for your ideal customers, because that will bring better results for your business website. Once again, the robots.txt file is NOT for SEO.

Use it either to control Google’s crawling so that it doesn’t burden a web server with requests for thousands of URLs, or when Google Search Console complains about “Submitted URL blocked by robots.txt”.

Robot Exclusion Protocol Definition

Strictly speaking, it’s a standard rather than a protocol. The robots exclusion standard, also known as the Robots Exclusion Protocol or simply robots.txt, is a standard used by websites to communicate with web crawlers and other web robots. Here’s a simple usage:

user-agent: *

Disallow: /pathtofoldertostopcrawleraccess/

Disallow: /pathtofiletostopcrawleraccess.html
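If you want to see how a crawler might read these rules, here is a minimal sketch using Python's standard urllib.robotparser module. The www.example.com URLs and the may_crawl helper are assumptions made up for illustration. Note that this particular parser treats a blank line as the end of a rule group, so the directives are written on consecutive lines.

```python
from urllib.robotparser import RobotFileParser

# The simple rules from above, written on consecutive lines.
rules = """\
User-agent: *
Disallow: /pathtofoldertostopcrawleraccess/
Disallow: /pathtofiletostopcrawleraccess.html
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())


def may_crawl(url: str, agent: str = "Googlebot") -> bool:
    """Hypothetical helper: True if the rules let this agent crawl the URL."""
    return parser.can_fetch(agent, url)


print(may_crawl("https://www.example.com/pathtofoldertostopcrawleraccess/page.html"))  # False
print(may_crawl("https://www.example.com/pathtofiletostopcrawleraccess.html"))  # False
print(may_crawl("https://www.example.com/about.html"))  # True
```

Everything under the disallowed folder is blocked because Disallow matches by path prefix, while any URL that matches no rule stays crawlable.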

What is a User-Agent

Besides a browser, a user agent could be a bot crawling webpages (such as Googlebot), a website download manager, or another application accessing the Web. When we use the asterisk symbol * after user-agent: the rules that follow apply to ALL crawlers that obey the Robots Exclusion Standard (some do not) and that have no more specific user-agent group of their own within the robots.txt file.
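A crawler that finds a group naming it directly follows that group and ignores the * group. The sketch below illustrates this with Python's urllib.robotparser; the folder names, the ExampleBot crawler name and the www.example.com URLs are made up for the example.

```python
from urllib.robotparser import RobotFileParser

# Two groups: one only for Googlebot, one for every other crawler.
rules = """\
User-agent: Googlebot
Disallow: /not-for-google/

User-agent: *
Disallow: /not-for-any-bot/
"""

groups = RobotFileParser()
groups.parse(rules.splitlines())

site = "https://www.example.com"

# Googlebot follows only the group that names it.
print(groups.can_fetch("Googlebot", site + "/not-for-google/page.html"))  # False
print(groups.can_fetch("Googlebot", site + "/not-for-any-bot/page.html"))  # True

# A crawler with no group of its own falls back to the * group.
print(groups.can_fetch("ExampleBot", site + "/not-for-google/page.html"))  # True
print(groups.can_fetch("ExampleBot", site + "/not-for-any-bot/page.html"))  # False
```

This is why robots.txt files that have a Googlebot group usually repeat the shared rules inside it instead of relying on the * group.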

What is robots.txt Disallow

Anytime you use Disallow: /path-to-URL you are telling user-agents NOT to access (as in crawl) any URL that starts with that path. You can have many different Disallow rules.

What is robots.txt Allow

Anytime you use Allow: /path-to-URL-to-Allow-Crawl-Access you are granting user-agents access to (as in permission to crawl) that URL. You can have many different Allow rules. How can this be useful?

User-agent: *

disallow: /entirefoldertoNOTaccess/*

Allow: /entirefoldertoNOTaccess/butgrantaccesstofile.js

You would only use the Allow directive when certain parts of a website are disallowed and yet you need to allow access to some individual parts within the disallowed parts.
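When an Allow rule and a Disallow rule both match a URL, Google and the current Robots Exclusion Protocol specification pick the most specific rule, meaning the one with the longest path. Here is a rough sketch of that idea, assuming plain path prefixes only (a trailing * is dropped, and other wildcards are not handled). The is_allowed function is a hypothetical helper, not part of any library.

```python
def is_allowed(rules, path):
    """Return True if `path` may be crawled under `rules`.

    `rules` is a list of (directive, pattern) pairs. The longest
    matching prefix wins, and Allow wins when two matches tie.
    """
    best_directive, best_length = "allow", -1  # no matching rule means allowed
    for directive, pattern in rules:
        prefix = pattern.rstrip("*")  # a trailing * adds nothing to a prefix match
        if prefix and path.startswith(prefix):
            directive = directive.lower()
            longer = len(prefix) > best_length
            tie_goes_to_allow = len(prefix) == best_length and directive == "allow"
            if longer or tie_goes_to_allow:
                best_directive, best_length = directive, len(prefix)
    return best_directive == "allow"


rules = [
    ("Disallow", "/entirefoldertoNOTaccess/*"),
    ("Allow", "/entirefoldertoNOTaccess/butgrantaccesstofile.js"),
]

print(is_allowed(rules, "/entirefoldertoNOTaccess/page.html"))  # False
print(is_allowed(rules, "/entirefoldertoNOTaccess/butgrantaccesstofile.js"))  # True
print(is_allowed(rules, "/about.html"))  # True
```

Be aware that not every parser works this way. Some older ones simply apply the first rule that matches, so listing the Allow line before the Disallow line is the more cautious ordering.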

Where Is the robots.txt File Located?

For most self-installed websites, the location of the file is the web server root (home) directory (usually public_html), so that it is served at an address such as https://www.example.com/robots.txt. Crawlers only request the file at the root of a host and do not look for it in subfolders. A subdomain counts as its own host, so a website installed on a subdomain needs its own robots.txt at the root of that subdomain.
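To make the location rule concrete, here is a small sketch of how a crawler can work out which robots.txt address to request for any page: it keeps the scheme and host of the page URL and swaps the path for /robots.txt. The robots_txt_url function and the example.com addresses are assumptions for illustration only.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Build the robots.txt address that governs `page_url`."""
    parts = urlsplit(page_url)
    # Keep the scheme and host (with port, if any); drop path, query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/blog/post.html?page=2"))
# https://www.example.com/robots.txt
print(robots_txt_url("https://shop.example.com/cart/"))
# https://shop.example.com/robots.txt
```

The second call shows that a different host name leads to a different robots.txt address.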

I Can’t Find My ROBOTS.TXT File on My Web Server

Keep in mind that some Content Management Systems (e.g. Blogger, Wix) generate this file automatically (as in virtually). That means you won’t find an actual file. Furthermore, some plugins may also generate this file automatically, so your web server home directory may not even contain a file called robots.txt.
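Even when no physical file exists, you can still see what crawlers receive by requesting the robots.txt address yourself. The sketch below does that with Python's standard library. The fetch_robots_txt function and its opener parameter (which only exists so the function can be tried without network access) are hypothetical names for this example.

```python
from urllib.request import urlopen


def fetch_robots_txt(robots_url: str, opener=urlopen) -> str:
    """Return the robots.txt text the server actually serves.

    Works the same whether the file exists on disk or is generated
    virtually by a CMS or plugin.
    """
    with opener(robots_url, timeout=10) as response:
        # Decode leniently so an odd byte does not stop the inspection.
        return response.read().decode("utf-8", errors="replace")
```

Calling fetch_robots_txt("https://www.example.com/robots.txt") with your own domain in place of www.example.com returns the rules as served, which is what matters to crawlers.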

How to Disallow Subdomains

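Web crawlers only look for robots.txt at the root of a host; they don’t check for robots.txt files in subdirectories. A subdomain counts as a separate host, so rules for a subdomain must live in a robots.txt file served from the root of that subdomain. On many web hosting servers, however, a subdomain’s files sit in a folder under the main site’s root (home) directory, which means the same content may also be reachable as a subdirectory of the main domain. To stop crawlers from accessing that folder through the main domain, add this to the robots.txt file in the root (home) directory:

user-agent: *

Disallow: /subdomainfoldername/*

Notice the * symbol, which takes care of everything within that folder (the rule works the same without it, because Disallow matches by prefix). Also remember that this only affects URLs on the main domain. To stop crawling of the subdomain itself, place a robots.txt file containing Disallow: / in the subdomain’s own root.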

robots.txt file WordPress Example

Below is a sample you can safely use for WordPress-built sites:

User-agent: Googlebot

Disallow: /cgi-bin/

Disallow: /wp-admin/$

Disallow: /author/admin/

Disallow: /wp-content/cache*

Disallow: */trackback/$

Disallow: /comments/feed*

Disallow: /wp-login.php?*

Disallow: *?comments=

Disallow: *?replytocom

Disallow: *?s=

Allow: /*.js*

Allow: /*.css*

Allow: /wp-admin/admin-ajax.php

Allow: /wp-admin/admin-ajax.php?action=*

User-agent: *

Disallow: /cgi-bin/

Disallow: /wp-admin/$

Disallow: /author/admin/

Disallow: /wp-content/cache*

Disallow: */trackback/$

Disallow: /comments/feed*

Disallow: /wp-login.php?*

Disallow: *?comments=

Disallow: *?replytocom

Disallow: *?s=

Allow: /*.js*

Allow: /*.css*

Allow: /wp-admin/admin-ajax.php

Allow: /wp-admin/admin-ajax.php?action=*
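The sample above leans on two pattern symbols that Google supports: * stands for any run of characters, and a $ at the end of a rule means the URL must end there. Here is a rough sketch of how such a pattern could be checked against a URL path, assuming those Google-style semantics and ignoring details such as percent-encoding. The rule_matches function is a hypothetical helper written for this example.

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Check one robots.txt path pattern against a URL path (with query)."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal pieces and let each * match any run of characters.
    regex = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    if anchored:
        regex += "$"
    # re.match anchors at the start of the path, like a prefix match.
    return re.match(regex, path) is not None


print(rule_matches("/wp-admin/$", "/wp-admin/"))  # True
print(rule_matches("/wp-admin/$", "/wp-admin/options.php"))  # False
print(rule_matches("*?s=", "/?s=robots"))  # True
print(rule_matches("/wp-login.php?*", "/wp-login.php"))  # False
print(rule_matches("/*.js*", "/wp-includes/js/jquery/jquery.min.js?ver=3"))  # True
```

Under these semantics, Disallow: /wp-admin/$ blocks only the exact /wp-admin/ address rather than everything inside the folder, and Disallow: /wp-login.php?* applies only when a query string is present.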

robots.txt file WordPress Example with Sitemap URL

User-agent: Googlebot

Disallow: /cgi-bin/

Disallow: /wp-admin/$

Disallow: /author/admin/

Disallow: /wp-content/cache*

Disallow: */trackback/$

Disallow: /comments/feed*

Disallow: /wp-login.php?*

Disallow: *?comments=

Disallow: *?replytocom

Disallow: *?s=

Allow: /*.js*

Allow: /*.css*

Allow: /wp-admin/admin-ajax.php

Allow: /wp-admin/admin-ajax.php?action=*

User-agent: *

Disallow: /cgi-bin/

Disallow: /wp-admin/$

Disallow: /author/admin/

Disallow: /wp-content/cache*

Disallow: */trackback/$

Disallow: /comments/feed*

Disallow: /wp-login.php?*

Disallow: *?comments=

Disallow: *?replytocom

Disallow: *?s=

Allow: /*.js*

Allow: /*.css*

Allow: /wp-admin/admin-ajax.php

Allow: /wp-admin/admin-ajax.php?action=*

# Delete this line, remove the leading # from the Sitemap lines below, and replace CHANGE with your own domain

#Sitemap: https://CHANGE/page-sitemap.xml

#Sitemap: https://CHANGE/post-sitemap.xml
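Lines that begin with # are comments, so the Sitemap lines only count once the # is removed. The following sketch shows the difference using Python's urllib.robotparser (its site_maps method needs Python 3.8 or newer). The sitemap_urls helper and the www.example.com address are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser


def sitemap_urls(robots_txt: str):
    """Return the Sitemap URLs declared in a robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []  # site_maps() returns None when there are none


commented_out = """\
User-agent: *
Disallow: /cgi-bin/
#Sitemap: https://www.example.com/page-sitemap.xml
"""

# Removing the leading # turns the comment into a real directive.
active = commented_out.replace("#Sitemap:", "Sitemap:")

print(sitemap_urls(commented_out))  # []
print(sitemap_urls(active))  # ['https://www.example.com/page-sitemap.xml']
```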

More Advanced Sample robots.txt file for WordPress

Do NOT use the sample below, as it is provided only to demonstrate complex usage:

User-agent: Googlebot

Disallow: /cgi-bin/

Disallow: /wp-admin/$

Disallow: /author/admin/

Disallow: /wp-content/cache/*

Disallow: /wp-includes/*.php$

Disallow: /wp-includes/blocks/*.php$

Disallow: /wp-includes/customize/*.php$

Disallow: /wp-includes/rest-api/*.php$

Disallow: /wp-includes/rest-api/endpoints/*.php$

Disallow: /wp-includes/Requests/*.php$

Disallow: /wp-includes/Requests/*/*.php$

Disallow: /wp-includes/SimplePie/*.php$

Disallow: /wp-includes/SimplePie/*/*.php$

Disallow: /wp-includes/SimplePie/*/*/*.php$

Disallow: /wp-includes/widgets/*.php$

Disallow: */trackback/$

Disallow: /comments/feed*

Disallow: /wp-login.php?*

Disallow: *?comments=

Disallow: *?replytocom

Disallow: *?s=

Allow: /*.js*

Allow: /*.css*

Allow: /wp-admin/admin-ajax.php

Allow: /wp-admin/admin-ajax.php?action=*

Allow: /wp-content/themes/rankya/*.css

Allow: /wp-content/themes/rankya/*/*.css

Allow: /wp-content/themes/rankya/js/*

Allow: /wp-content/themes/rankya/*/*/front*

Allow: /wp-includes/js/wp-em*.js

Allow: /wp-includes/js/*-reply.min.js

Allow: /wp-includes/js/jq*/*.js

Allow: /wp-content/uploads/*

User-agent: *

Disallow: /cgi-bin/

Disallow: /wp-admin/$

Disallow: /author/admin/

Disallow: /wp-content/cache/*

Disallow: */trackback/$

Disallow: /comments/feed*

Disallow: /wp-login.php?*

Disallow: *?comments=

Disallow: *?replytocom

Disallow: *?s=

Allow: /*.js*

Allow: /*.css*

Allow: /wp-admin/admin-ajax.php

Allow: /wp-admin/admin-ajax.php?action=*

Disallow: /2016/

Disallow: /2017/

Allow: /wp-content/themes/rankya/*.css

Allow: /wp-content/themes/rankya/*/*.css

Allow: /wp-content/themes/rankya/js/*

Allow: /wp-content/themes/rankya/*/*/front*

Allow: /wp-includes/js/wp-em*.js

Allow: /wp-includes/js/*-reply.min.js

Allow: /wp-includes/js/jq*/*.js

Allow: /wp-content/uploads/*

User-agent: Googlebot-image

Disallow:

Sitemap: https://www.example.com/page-sitemap.xml

Sitemap: https://www.example.com/post-sitemap.xml

Learn More About How Google Treats Robots.txt Rules

When you want to control how Google’s website crawlers crawl and index publicly accessible websites, understand that you only use the robots.txt file to control crawling, as opposed to Google indexing. Google, like most well-behaved web crawlers, obeys the rules within a robots.txt file.

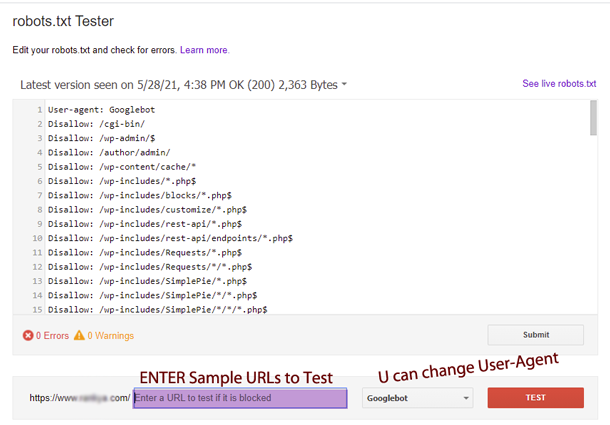

How to Test robots.txt Rules in Google Search Console

Simply visit the Search Console robots.txt Testing Tool and select your verified website property. It will then take you to Legacy Tools, where you can test your robots.txt file directives.
