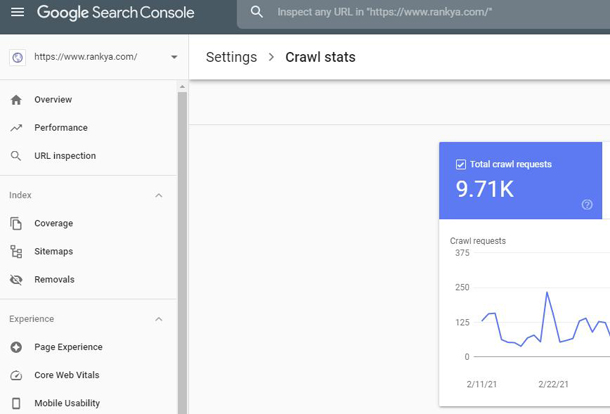

Google fetches and crawls web pages quickly and efficiently, and the Crawl Stats report provides insights into the following:

Pages crawled per day

The number of pages Google’s crawlers fetched from your website each day.

Kilobytes downloaded per day

This graph shows how many kilobytes (thousands of bytes) Google downloaded each day while crawling your web pages.

Time spent downloading a page (in milliseconds)

The time, in milliseconds, that Google’s crawlers spent downloading a page, shown for each day.
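Because these three graphs describe the same crawl activity, you can combine them. For example, dividing kilobytes downloaded by pages crawled gives a rough average page size for that day. Below is a minimal Python sketch, assuming you copy the daily figures by hand and that the "time spent downloading a page" value is a daily average per page; the `CrawlDay` class and all numbers are hypothetical, not a Search Console export format.

```python
from dataclasses import dataclass


@dataclass
class CrawlDay:
    """One day of crawl stats (hypothetical structure, filled in by hand)."""
    date: str
    pages_crawled: int
    kilobytes_downloaded: int
    ms_per_page: int  # assumed: average time spent downloading a page, in milliseconds


def average_kb_per_page(day: CrawlDay) -> float:
    """Rough average page size: kilobytes downloaded divided by pages crawled."""
    if day.pages_crawled == 0:
        return 0.0  # no crawling that day, so no meaningful average
    return day.kilobytes_downloaded / day.pages_crawled


def estimated_download_seconds(day: CrawlDay) -> float:
    """Approximate total download time, treating ms_per_page as a daily average."""
    return day.pages_crawled * day.ms_per_page / 1000


if __name__ == "__main__":
    # Hypothetical figures for a single day.
    day = CrawlDay("2017-05-18", pages_crawled=120, kilobytes_downloaded=3600, ms_per_page=450)
    print(f"{day.date}: {average_kb_per_page(day):.1f} KB per page, "
          f"about {estimated_download_seconds(day):.0f} s spent downloading")
```

If the average page size or download time jumps while pages crawled stays flat, that may point to heavier pages or a slower server rather than a change in how often Google visits.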

What is Important When Analyzing Google Search Console Crawl Stats?

Check the Crawl Stats page for any unusual activity in Google’s crawling of your site. The same applies to most of the reports you analyze in Google Search Console: Google Search Console (formerly Google Webmaster Tools) is mainly used for identifying errors and issues.
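One simple way to spot unusual activity is to flag days whose pages-crawled count sits far from the average. The sketch below uses a z-score (the distance from the mean, measured in standard deviations) with a threshold of 2; the threshold, the `unusual_days` helper and the sample numbers are all assumptions for illustration, not values or methods Google uses.

```python
from __future__ import annotations

from statistics import mean, stdev


def unusual_days(pages_per_day: dict[str, int], threshold: float = 2.0) -> list[str]:
    """Return the dates whose crawl count is more than `threshold` standard
    deviations away from the mean of all days (hypothetical helper)."""
    counts = list(pages_per_day.values())
    if len(counts) < 2:
        return []  # a standard deviation needs at least two data points
    avg = mean(counts)
    spread = stdev(counts)
    if spread == 0:
        return []  # every day is identical, so nothing stands out
    return [
        day for day, count in pages_per_day.items()
        if abs(count - avg) / spread > threshold
    ]


if __name__ == "__main__":
    # Hypothetical two weeks of "pages crawled per day" with one spike.
    counts = [98, 102, 100, 97, 103, 101, 99, 100, 102, 98, 101, 99, 100, 400]
    sample = {f"2017-05-{d:02d}": c for d, c in enumerate(counts, start=1)}
    print(unusual_days(sample))
```

A sudden drop is flagged the same way as a spike, since the check uses the absolute distance from the mean.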

Think of it this way: if you publish content on a regular basis, Google will allocate a certain crawl budget to your website. The more content you publish regularly, the more often Google will crawl your website; the less you publish, the less Google will crawl it. To help improve your Google rankings, you should publish blog posts regularly.

Can You Increase Google Crawling Requests?

Although it’s best to let Google work out when and how often it should crawl your website, you can encourage more crawling by making sure your website uses an XML sitemap that gives cues about when each page was last modified, for example:

```xml
<lastmod>2017-05-18T01:36:50+00:00</lastmod>
```

You can also use structured data to give further cues about the published and updated dates of your blog posts.
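If you generate these cues yourself rather than relying on a plugin, it helps to produce the sitemap `<lastmod>` value and the structured data dates from the same timestamps so the two never disagree. Here is a minimal Python sketch using only the standard library; the post URL, headline and dates are hypothetical placeholders, and the helper names are illustrative. Dates are emitted in the same W3C Datetime (ISO 8601) form as the `<lastmod>` example, and the JSON-LD output would be placed inside a `<script type="application/ld+json">` element on the post.

```python
from __future__ import annotations

import json
from datetime import datetime, timezone
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def w3c_datetime(moment: datetime) -> str:
    """Format a timezone-aware datetime like 2017-05-18T01:36:50+00:00."""
    return moment.isoformat(timespec="seconds")


def build_sitemap(pages: list[tuple[str, datetime]]) -> str:
    """Build a <urlset> with one <url> (loc + lastmod) per page."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, modified in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = w3c_datetime(modified)
    return ET.tostring(urlset, encoding="unicode")


def blog_posting_json_ld(headline: str, published: datetime, modified: datetime) -> str:
    """schema.org BlogPosting carrying datePublished and dateModified."""
    return json.dumps(
        {
            "@context": "https://schema.org",
            "@type": "BlogPosting",
            "headline": headline,
            "datePublished": w3c_datetime(published),
            "dateModified": w3c_datetime(modified),
        },
        indent=2,
    )


if __name__ == "__main__":
    # Hypothetical post: URL, headline and dates are placeholders.
    published = datetime(2017, 5, 1, 9, 0, 0, tzinfo=timezone.utc)
    modified = datetime(2017, 5, 18, 1, 36, 50, tzinfo=timezone.utc)
    print(build_sitemap([("https://www.example.com/blog/sample-post/", modified)]))
    print(blog_posting_json_ld("Sample post", published, modified))
```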

The code below outputs a WordPress blog post’s time information, with the `datePublished` and `dateModified` schema markup item properties added:

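```html
<!-- %1$s to %4$s are placeholders filled by PHP sprintf(); itemprop values are only read as microdata inside an itemscope element, e.g. itemtype="https://schema.org/BlogPosting" -->
<time itemprop="datePublished" class="entry-date published" datetime="%1$s">%2$s</time>
<time itemprop="dateModified" class="updated" datetime="%3$s">%4$s</time>
```

What is a Web Crawler?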

"Web crawler" (also known as user-agent, spider, web fetcher, or bot) is a generic term for any program that automatically discovers and scans websites by following links from one web page to another. Googlebot is Google’s main web crawler, and directives written for it also apply to many of Google’s other crawlers.

However, depending on your website’s requirements, you may want to use different directives for different Google crawlers.
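To see how per-crawler directives play out, Python’s standard `urllib.robotparser` module can evaluate a robots.txt file. The rules and URLs below are hypothetical. One caveat: this parser applies the first group whose user-agent name appears in the crawler’s name, whereas Google follows the most specific matching group, so the sketch lists the more specific `Googlebot-Image` group first to get the same result.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with separate groups for two Google crawlers.
# Python's parser uses the first group whose name matches, so the more
# specific Googlebot-Image group is listed before the Googlebot group.
ROBOTS_TXT = """\
User-agent: Googlebot-Image
Disallow: /private-images/

User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /admin/
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

SITE = "https://www.example.com"  # placeholder domain

CHECKS = [
    ("Googlebot", "/drafts/new-post/"),
    ("Googlebot", "/admin/"),
    ("Googlebot-Image", "/private-images/photo.png"),
    ("Googlebot-Image", "/drafts/new-post/"),
    ("Bingbot", "/admin/"),
]

if __name__ == "__main__":
    for agent, path in CHECKS:
        verdict = "allowed" if rp.can_fetch(agent, SITE + path) else "blocked"
        print(f"{agent:16} {path:28} {verdict}")
```

Notice that Googlebot is allowed into `/admin/` here: once a crawler has its own group, the `*` group no longer applies to it, which is also how the robots.txt standard (RFC 9309) treats groups. If you want a rule to cover every crawler, repeat it in each named group.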

A website has 300 pages in total: 290 are blog posts and the other 10 are pages such as Contact Us and About Us. A blog post is updated every two to three days. So what should the crawl rate be? When I looked in GWT, it showed a range of 1,000 to 2,700.

If the site has 300 pages, why is Google crawling anywhere from 1,000 to 2,700 pages? I also used the site: operator and it showed 305 pages indexed, the same result as the Index tab in GWT.

Is the crawl rate measured differently, or does Google crawl the same pages more than once? Can you please advise?

Hello Sam, the crawl settings in Google Search Console are only a guide for Google; Google will still decide for itself how to crawl a website. In your case, your site seems small, so you should not set a crawl rate in Google Search Console. Simply focus on creating more content. The only time the crawl rate should be changed through Search Console is if crawling is depleting the site’s server resources.

How can this happen? Imagine a very busy website with many visitors each day, hosted on a shared server (where other websites use the same CPU, RAM, and MySQL resources), and not fully optimized. If Googlebot is also making many HTTP requests, the site may well go down because of resource usage. The crawl rate setting in Google Search Console exists for exactly this scenario: to slow down Googlebot’s crawling.
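If you suspect Googlebot is putting load on a server, the access log shows how many requests it actually makes and whether it fetches the same URLs repeatedly, which is one possible reason crawl counts can exceed the number of pages on a site. Below is a minimal Python sketch, assuming logs in the common Apache/Nginx "combined" format; the sample log lines and the `googlebot_requests` helper are made up for illustration. Anyone can send a Googlebot user-agent string, so a thorough check would also verify the requesting IP address (Google documents a reverse DNS lookup for this).

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format:
# host ident user [day:time zone] "METHOD path protocol" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[(?P<day>[^:]+):[^\]]+\] '
    r'"\S+ (?P<path>\S+)[^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Hypothetical log lines: two fetches of the same post, one stylesheet, one visitor.
SAMPLE_LOG = [
    f'66.249.66.1 - - [18/May/2017:01:36:50 +0000] "GET /blog/sample-post/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [18/May/2017:09:12:03 +0000] "GET /blog/sample-post/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [18/May/2017:09:12:04 +0000] "GET /wp-content/themes/style.css HTTP/1.1" 200 2048 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [18/May/2017:10:00:00 +0000] "GET /about-us/ HTTP/1.1" 200 3072 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]


def googlebot_requests(lines):
    """Count requests whose user-agent claims to be Googlebot, per day and per URL path."""
    per_day = Counter()
    per_path = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            per_day[match.group("day")] += 1
            per_path[match.group("path")] += 1
    return per_day, per_path


if __name__ == "__main__":
    per_day, per_path = googlebot_requests(SAMPLE_LOG)
    print(dict(per_day))
    print(per_path.most_common())
```

Repeat fetches of the same URL and requests for stylesheets, scripts and images all count as crawl requests, even though none of them is a new page.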

With that cleared up, it’s perfectly fine for site:yoursiteexample.com to show extra URLs indexed in Google (you can remove those URLs using noindex rules).